What is Docker and What is it used for?

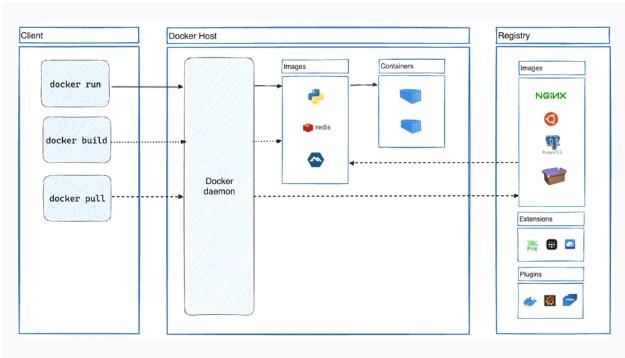

In the ever-evolving world of technology, the way we develop, deploy, and manage applications has undergone a significant transformation. Traditional software development and deployment methods often lead to complex, time-consuming, and error-prone processes. Developers must ensure that their applications run consistently across different environments, from development to production, which can be difficult. This is where Docker, a containerization technology, comes in. It has become a game changer in software development and deployment because it simplifies the process and enables a more efficient and consistent approach. This makes it a crucial tool in the CCIE DevNet community.

In this blog, we will discuss what Docker is, its history, what it is used for, how it works, its architecture, how it differs from Kubernetes, and, in the end, the advantages associated with it. Whether you’re a developer, DevOps engineer, or IT professional, understanding Docker and its capabilities is crucial for staying competitive in the rapidly evolving tech landscape. If you’re interested in expanding your knowledge and skills in this area, the DevNet Expert Training offered by PyNet Labs is an excellent resource to consider.

Before getting into more details, let’s first understand what it really is. Docker is an open-source platform for developing, deploying, and managing applications with the help of containerization. Containers are lightweight, standalone, executable software packages that include everything necessary to run an application: the code, runtime, system tools, and libraries. This approach differs from traditional virtualization, where each VM (Virtual Machine) runs a complete OS, which makes the process more resource-intensive and less efficient. Many readers will ask how exactly it differs from the traditional approach.
The answer is that Docker containers share the host operating system’s kernel, which makes them more lightweight and portable than VMs. As a result, applications can be packaged and deployed across different environments without complex configuration.

Now that we have a basic understanding of Docker, the next question is how it came into existence, i.e., its history. Let’s discuss it!

Docker was first introduced in 2013, as version 0.1, by Solomon Hykes at dotCloud. The idea behind it was to create a more efficient and scalable way to develop, package, and deploy applications. Before this technology, the industry relied largely on VMs to achieve similar goals, but VMs were complex, resource-intensive, and difficult to manage. The initial release of Docker was a breakthrough, as it provided a simple and intuitive way to package and deploy applications using containers.

In 2014, version 1.0 was released, which solidified Docker’s position as a reliable and mature platform for containerization. It has gone through various updates in the years since. A major one came in 2017, when Docker introduced its Enterprise Edition, and in 2019 the Docker Enterprise business was acquired by Mirantis (a cloud computing company), while Docker itself continued as an independent company. Its success can be attributed to its ability to simplify the development, deployment, and management of applications, as well as its strong community support and the ecosystem of tools and services that has been built around it.

Before getting into its functioning, let’s first understand what Docker is used for. It has a wide range of applications and use cases. Below, we have discussed some of these:

Let’s now understand its functioning. Docker works on the concept of containerization, in which an application and its dependencies are packaged into a single, portable, and self-contained unit called a container.
This container can then be deployed and run on any system that has Docker installed, without the need for any complex configuration. The key components that assist in the functioning are:

The process of using it typically involves the following steps:

Now that we understand how it works, let’s discuss its architecture along with its components. Docker’s architecture is based on a client-server model, in which the Docker client communicates with the Docker daemon (the core of Docker Engine) to perform various operations. The key components of its architecture are:

Note: The architecture is designed to be modular and extensible, so third-party tools, i.e., extensions and services, can be integrated to extend the functionality of the platform.

Let’s now move on to the next section, i.e., Kubernetes vs Docker. Below, we have discussed the common differences between the two, based on different factors, in tabular form.

We now have detailed information regarding Docker; let’s discuss the advantages associated with it. Docker has many advantages that have led to its widespread adoption in the software development and deployment industry. Let’s discuss some of these advantages.

Docker is a platform with which developers can easily package applications and their dependencies into a container. It is used because it simplifies application deployment and ensures a consistent runtime environment across different systems.

Docker is a containerization platform, whereas Kubernetes is a container orchestration system. Kubernetes manages and scales containers across multiple hosts, offering advanced features for the deployment and management of containerized applications.

No, Kubernetes does not require Docker to run. Kubernetes is a container orchestration platform that can work with various container runtimes, including Docker, containerd, and CRI-O.
No, Docker is not a virtual machine. It uses containerization to package and run applications in a more lightweight and efficient manner.

Docker has revolutionized the way we develop, package, and deploy applications. By providing a containerization platform that addresses the challenges of traditional software deployment, it has become an essential tool in the modern software development ecosystem. In this blog, we have discussed what Docker is, what it is used for, its history, workflow, components, and advantages.

Introduction

What is Docker?

History of Docker

What is Docker Used For?

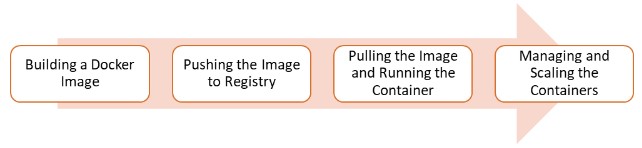

How Does Docker Work?
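
The workflow described in this post (package an application and its dependencies into an image, then run that image as a container) can be sketched in a few lines of Python. This is only an illustrative sketch, not an official workflow: the helper names `render_dockerfile`, `build_command`, and `run_command`, the script name `app.py`, the image tag `demo-app:latest`, and the base image `python:3.12-slim` are all assumptions made for the example. The code only generates the Dockerfile text and the `docker` command lines; it does not invoke Docker itself.

```python
import shlex


def render_dockerfile(base_image: str, entry_script: str) -> str:
    """Return Dockerfile text that packages one script on top of a base image."""
    lines = [
        f"FROM {base_image}",                 # base layer with the runtime and libraries
        "WORKDIR /app",                       # working directory inside the image
        f"COPY {entry_script} .",             # add the application code to the image
        f'CMD ["python", "{entry_script}"]',  # default process when a container starts
    ]
    return "\n".join(lines) + "\n"


def build_command(tag: str, context_dir: str = ".") -> list[str]:
    """Command line that builds an image from the Dockerfile in context_dir."""
    return ["docker", "build", "-t", tag, context_dir]


def run_command(tag: str, host_port: int, container_port: int) -> list[str]:
    """Command line that starts a container and publishes one port to the host."""
    return ["docker", "run", "--rm", "-p", f"{host_port}:{container_port}", tag]


if __name__ == "__main__":
    # Hypothetical names, chosen only for this example.
    print(render_dockerfile("python:3.12-slim", "app.py"))
    print(shlex.join(build_command("demo-app:latest")))
    print(shlex.join(run_command("demo-app:latest", 8000, 8000)))
```

To try it for real, you would save the generated text as a file named `Dockerfile` next to `app.py` and run the two printed commands on a machine with Docker installed.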

Docker Architecture
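
To make the client-server model a little more concrete, here is a minimal sketch of a "client" asking the Docker daemon for its version over the daemon's HTTP API. It rests on several assumptions: a Linux or macOS host, the daemon listening on the common default socket path `/var/run/docker.sock`, and a user with permission to read that socket. The class `UnixHTTPConnection` and the function `daemon_version` are hypothetical helpers written for this example; in practice the `docker` command-line client handles this communication for you.

```python
import http.client
import json
import socket
from typing import Optional

DOCKER_SOCKET = "/var/run/docker.sock"  # assumed default socket path


class UnixHTTPConnection(http.client.HTTPConnection):
    """HTTPConnection that talks over a Unix domain socket instead of TCP."""

    def __init__(self, socket_path: str, timeout: float = 5.0):
        super().__init__("localhost", timeout=timeout)
        self.socket_path = socket_path

    def connect(self) -> None:
        sock = socket.socket(socket.AF_UNIX, socket.SOCK_STREAM)
        sock.settimeout(self.timeout)
        sock.connect(self.socket_path)
        self.sock = sock


def daemon_version(socket_path: str = DOCKER_SOCKET) -> Optional[dict]:
    """Ask the daemon for its version info; return None if it cannot be reached."""
    conn = UnixHTTPConnection(socket_path)
    try:
        conn.request("GET", "/version")  # the client sends a request to the daemon
        response = conn.getresponse()    # the daemon answers with JSON
        if response.status != 200:
            return None
        return json.loads(response.read())
    except (OSError, http.client.HTTPException, ValueError):
        return None
    finally:
        conn.close()


if __name__ == "__main__":
    info = daemon_version()
    print(info.get("Version") if info else "Docker daemon not reachable")
```

The point of the sketch is the split in responsibilities: the client only sends requests, while the daemon does the actual work of building images and running containers.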

Difference Between Kubernetes and Docker

| Factor | Kubernetes | Docker |
| --- | --- | --- |
| Purpose | Orchestration and management of containerized applications | Packaging and deployment of containerized applications |
| Scope | Cluster-level management of containers | Container-level management |
| Focus | Scalability, high availability, and fault tolerance | Containerization and image management |
| Architecture | Distributed, with a master-worker node structure | Centralized, with a client-server architecture |
| Deployment | Across multiple hosts and cloud environments | Primarily on a single host |
| Networking | Provides advanced networking features for container communication | Offers basic networking capabilities for container connectivity |
| Storage | Supports various storage solutions for persistent data | Relies on volumes for data storage |

Advantages of Docker

Frequently Asked Questions

Q1 – What is Docker and why is it used?

Q2 – What is the difference between Docker and Kubernetes?

Q3 – Can Kubernetes run without Docker?

Q4 – Is Docker a virtual machine?

Conclusion